The Dawn of a New Era in Cybersecurity

The relentless cat-and-mouse game between cyber defenders and malicious actors has entered a new, transformative phase. For decades, penetration testing, the ethical practice of probing systems for weaknesses, has been a cornerstone of proactive defense, relying heavily on human ingenuity and expertise. Today, artificial intelligence is no longer a futuristic concept but a present-day force actively reshaping this field. This article explores the impact of AI on the future of penetration testing: how it is automating routine tasks, augmenting the capabilities of human experts, and redefining the nature of offensive security. It covers the shift from manual analysis to intelligent automation, the emerging symbiotic relationship between human and machine, and the strategic imperatives for security professionals in this evolving landscape.

From Manual Scripts to Intelligent Systems: The Evolution of Pen Testing

To appreciate the significance of AI’s arrival, it is essential to understand the history of penetration testing. The practice began as a highly manual, artisanal craft, with experts relying on deep system knowledge and custom-written scripts to uncover vulnerabilities. The first wave of innovation brought automated tools like vulnerability scanners and exploitation frameworks, which dramatically increased speed and scale. However, these early tools often lacked context, generating a high volume of false positives and requiring significant human effort to validate findings and identify complex attack chains. They could report what was vulnerable but struggled to show how a weakness could be exploited and why it mattered to the business. This limitation created a clear need for the next logical step: a more intelligent form of automation capable of mimicking human reasoning, learning from its environment, and understanding context. AI is now beginning to fill that role.

The Core of the Transformation: How AI is Augmenting the Pen Tester’s Toolkit

Accelerating Discovery with AI-Powered Reconnaissance and Vulnerability Scanning

The initial phases of any penetration test—reconnaissance and vulnerability scanning—are often the most time-consuming. They involve meticulously mapping an organization’s digital footprint and sifting through vast amounts of data to identify potential entry points. AI is fundamentally accelerating this process. Machine learning models can now analyze public data, source code repositories, and network traffic at a scale and speed unattainable by humans, discovering overlooked assets and potential weaknesses in minutes rather than days. AI-enhanced static and dynamic application security testing (SAST/DAST) tools go a step further, not just flagging potential code flaws but also learning to prioritize them based on contextual clues. The primary benefit is clear: by offloading these laborious tasks to intelligent systems, human pen testers are freed to focus their cognitive energy on higher-order problems, such as exploiting complex business logic flaws and developing creative attack strategies.
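To make the idea of context-based prioritization concrete, here is a minimal Python sketch. It is not taken from any particular SAST/DAST product: the `Finding` fields, the context flags, and the multipliers in `CONTEXT_WEIGHTS` are all hypothetical, chosen only to show how contextual clues could reorder findings that a plain severity sort would rank differently. A real tool would presumably learn or tune such weights from validated results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One scanner finding plus a few contextual clues (all fields are illustrative)."""
    title: str
    base_severity: float          # 0.0 to 10.0, e.g. a CVSS-style base score
    internet_facing: bool
    handles_sensitive_data: bool
    exploit_public: bool


# Hypothetical multipliers; assumed values, not derived from any real product.
CONTEXT_WEIGHTS = {
    "internet_facing": 1.5,
    "handles_sensitive_data": 1.3,
    "exploit_public": 1.4,
}


def priority(finding: Finding) -> float:
    """Scale the base severity by every context flag that is set."""
    score = finding.base_severity
    for flag, weight in CONTEXT_WEIGHTS.items():
        if getattr(finding, flag):
            score *= weight
    return score


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from highest to lowest contextual priority."""
    return sorted(findings, key=priority, reverse=True)


findings = [
    Finding("Weak TLS configuration on intranet wiki", 7.0, False, False, False),
    Finding("SQL injection in public checkout form", 6.0, True, True, True),
]
ranked = prioritize(findings)
for f in ranked:
    print(f"{priority(f):6.2f}  {f.title}")
```

In this toy data set the checkout-form issue has the lower base score, yet it rises to the top once exposure, data sensitivity, and exploit availability are taken into account.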

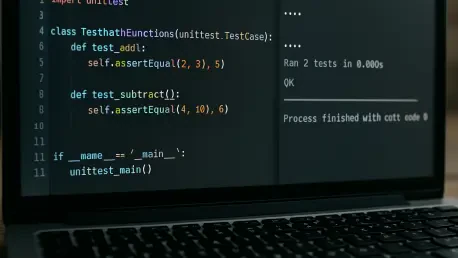

Simulating Sophisticated Attack Paths with Intelligent Automation

Beyond simple discovery, AI is proving its worth in simulating the multi-stage, adaptive campaigns characteristic of modern cyberattacks. Traditional automated tools often test for isolated vulnerabilities, but AI-driven platforms can now intelligently chain exploits together to model a complete attack path, from initial compromise to final objective. These systems, often found in Breach and Attack Simulation (BAS) technologies, can autonomously pivot through a network, adapt tactics based on the defenses they encounter, and even learn from previous tests to become more effective over time. This provides organizations with a continuous, dynamic assessment of their security posture against an intelligent adversary. However, this power comes with risk; an improperly configured or unrestrained AI testing agent could inadvertently cause significant disruption to live production environments, highlighting the need for careful implementation and oversight.
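The chaining of individual weaknesses into a complete attack path can be illustrated with a simple graph search. The sketch below is a simplification, not how any specific BAS platform works: the hosts, techniques, and `ATTACK_GRAPH` structure are invented for illustration, and a breadth-first search stands in for the adaptive planning such platforms are described as performing. The `in_scope` allowlist reflects the oversight concern raised above, since the search never steps onto a host that has not been explicitly approved for testing.

```python
from collections import deque

# Hypothetical attack graph: each edge means "from this foothold, an attacker
# could reach the next host using the named technique".
ATTACK_GRAPH = {
    "phishing-foothold": [("workstation", "malicious attachment")],
    "workstation": [
        ("file-server", "reused local admin password"),
        ("prod-db", "exposed admin port"),
    ],
    "file-server": [("domain-controller", "credentials left in a script")],
    "prod-db": [],
    "domain-controller": [],
}


def find_attack_path(graph, start, goal, in_scope):
    """Breadth-first search for the shortest chain of steps from start to goal.

    Hosts outside the in_scope allowlist are never visited. Returns a list of
    (source, technique, destination) steps, or None if no in-scope path exists.
    """
    if start not in in_scope or goal not in in_scope:
        return None
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for nxt, technique in graph.get(node, []):
            if nxt in visited or nxt not in in_scope:
                continue
            visited.add(nxt)
            queue.append((nxt, path + [(node, technique, nxt)]))
    return None


# The production database is deliberately left out of scope.
scope = {"phishing-foothold", "workstation", "file-server", "domain-controller"}
path = find_attack_path(ATTACK_GRAPH, "phishing-foothold", "domain-controller", scope)
blocked = find_attack_path(ATTACK_GRAPH, "phishing-foothold", "prod-db", scope)

for source, technique, destination in path:
    print(f"{source} -> {destination} via {technique}")
```

Keeping the scope check inside the search itself, rather than filtering results afterwards, means an out-of-scope system is never even considered as an intermediate hop.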

The Human-in-the-Loop Imperative: Bridging the Gap Between Automation and Intuition

Despite these advancements, the notion of AI completely replacing human pen testers remains a distant prospect. AI systems, particularly in their current form, struggle with abstract reasoning, creative problem-solving, and understanding business context. An AI might identify a technically “low-risk” vulnerability, but only a human expert can recognize that its location within a critical financial processing system makes it a severe threat. This is where the “human-in-the-loop” model becomes essential. In this collaborative framework, the human expert acts as an “AI orchestrator,” setting strategic goals, validating the machine’s findings, and executing attacks that require intuition and out-of-the-box thinking. The future of the profession lies not in a human-versus-machine dichotomy, but in a powerful synergy where AI handles the scale and speed, while humans provide the strategic oversight, creativity, and ultimate judgment.
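One way to picture the human-in-the-loop model is as a triage step in which automated severity ratings are accepted by default, but anything touching a business-critical asset is routed to a person who can override the rating. The following Python sketch assumes a hypothetical `CRITICAL_ASSETS` set and a `human_review` callback standing in for the expert's judgment; neither comes from a real tool.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional


@dataclass(frozen=True)
class TriageItem:
    """A machine-reported finding awaiting a final severity (illustrative fields)."""
    title: str
    asset: str
    tool_severity: str                    # severity assigned by the automated tool
    final_severity: Optional[str] = None
    reviewed_by_human: bool = False


# Hypothetical list of assets whose findings always need expert review.
CRITICAL_ASSETS = {"payments-gateway"}


def triage(items: list[TriageItem],
           human_review: Callable[[TriageItem], str]) -> list[TriageItem]:
    """Accept tool severity for ordinary assets; defer to a human for critical ones."""
    results = []
    for item in items:
        if item.asset in CRITICAL_ASSETS:
            decided = human_review(item)  # the expert supplies business context
            results.append(replace(item, final_severity=decided, reviewed_by_human=True))
        else:
            results.append(replace(item, final_severity=item.tool_severity))
    return results


def example_reviewer(item: TriageItem) -> str:
    """Stand-in for a human decision: escalate anything on a critical asset."""
    return "critical"


items = [
    TriageItem("Verbose error messages", "marketing-site", "low"),
    TriageItem("Verbose error messages", "payments-gateway", "low"),
]
triaged = triage(items, example_reviewer)
for t in triaged:
    print(t.asset, t.tool_severity, "->", t.final_severity, "(human)" if t.reviewed_by_human else "(auto)")
```

The same technical finding ends up with two different final ratings here, which mirrors the point that business context, not the scanner output alone, determines how severe an issue really is.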

The Horizon Beckons: Future Trajectories for AI in Offensive Security

Looking forward, the integration of AI into offensive security is set to deepen. The rise of Generative AI and Large Language Models (LLMs) promises to further enhance the pen tester’s toolkit, automating the creation of context-aware phishing campaigns, generating custom exploit code for novel vulnerabilities, and drafting detailed, human-readable reports. We can also anticipate the development of “adversarial AI,” where AI agents are trained to actively hunt for and exploit weaknesses in other AI systems, opening a new front in cybersecurity. As AI defenders become more sophisticated, the demand for equally advanced AI-powered offensive tools will grow, sparking a technological arms race that will push the boundaries of both attack and defense.

Adapting for the Future: Strategic Imperatives for Security Professionals

The integration of AI is not just a technological shift; it is a professional one. For individuals and organizations to thrive, they must adapt. Security professionals should focus on upskilling, moving from manual, repetitive tasks toward strategic roles that emphasize critical thinking, threat modeling, and managing AI-driven testing platforms. Businesses must invest in integrated security solutions that leverage AI but also maintain strong human oversight. The most effective strategy will be to build “centaur” teams, where human experts and AI tools work in concert, each amplifying the other’s strengths. Embracing AI as a force multiplier, rather than viewing it as a replacement, will be the key to building a more resilient and proactive security posture.

A New Partnership in the Fight Against Cyber Threats

The infusion of artificial intelligence into penetration testing marks a pivotal moment in the history of cybersecurity. It signals a move away from purely manual efforts and toward a more intelligent, scalable, and continuous approach to identifying and mitigating risk. While AI brings unprecedented speed and analytical power to the table, it cannot replicate the intuition, ethical judgment, and strategic creativity of a human expert. The future, therefore, is not one of automation alone, but of augmentation. The most effective defense will be forged in the partnership between human and machine, an alliance better equipped to secure our increasingly complex digital world. For security professionals, the call to action is clear: adapt, upskill, and prepare to lead in an AI-augmented future.