The rapid adoption of artificial intelligence is a double-edged sword for the modern enterprise: it promises unprecedented efficiency, yet it runs on data that organizations often do not fully understand. This article analyzes the critical trend of AI data governance, exploring the dangerous disconnect between the rush to deploy AI and the widespread lack of data awareness, and charts a course for a more secure, data-centric future.

The Current Landscape: AI’s Proliferation vs. Data’s Obscurity

The Unstoppable Momentum of AI Adoption

The integration of artificial intelligence into core business functions is no longer a futuristic concept but a present-day reality. An overwhelming majority of businesses are actively deploying AI tools to enhance operations, drive efficiency, and gain a competitive edge. A recent Omdia survey reveals that a staggering 90% of organizations have implemented AI in a cybersecurity capacity, a figure that shows how deeply AI has already reached into the most sensitive and critical areas of enterprise management. This is not tentative exploration but full-scale adoption.

This trend is propelled by the increasing accessibility of powerful commercial platforms like Microsoft’s Copilot and IBM’s watsonx, which have lowered the barrier to entry for sophisticated AI capabilities. Alongside these ready-made solutions, many organizations are developing proprietary in-house models to address specific needs. Consequently, AI adoption has shifted from a strategic option to a competitive necessity, forcing businesses to participate in the AI race or risk being left behind.

The Pervasive Blind Spot in Corporate Data

While the momentum behind AI is undeniable, it masks a fundamental and hazardous weakness within most organizations. The same Omdia survey that highlights high AI adoption also exposes a critical vulnerability: a mere 11% of senior IT decision-makers can confidently account for 100% of their organization’s data. This statistic reveals a massive gap between the ambition to implement advanced technology and the foundational readiness required to do so safely.

Without a complete data inventory, organizations are blindly feeding potentially sensitive information into AI models. In practice, this means trade secrets, customer personally identifiable information (PII), and other regulated data could be inadvertently exposed. The risk is particularly acute with publicly available AI services, where submitted data may be retained and absorbed into the model, leading to irreversible leaks, non-compliance with frameworks like GDPR, and significant operational failures.

Expert Insights on the Inherent Risks and Responsibilities

The Critical Disconnect in High-Stakes Environments

Industry analysis points to a profound and troubling paradox at the heart of the current AI trend. Companies are eagerly applying a data-dependent technology to one of their most sensitive functions, using AI for cybersecurity while remaining largely unaware of the very data that underpins it. This practice creates an exceptionally unstable foundation in which the tool intended to enhance security could itself become a vector for catastrophic data exposure.

This disconnect transforms a strategic asset into a potential liability. The AI systems tasked with identifying threats and protecting digital perimeters are only as effective and secure as the data they are trained on. When organizations cannot vouch for the integrity or sensitivity of that data, they are not strengthening their defenses but are instead introducing a powerful, unvetted element into their most critical systems.

The Inevitability of Regulatory and Reputational Damage

Thought leaders in technology and governance warn that the prevailing “deploy now, govern later” approach to AI is an inherently unsustainable strategy. The race for a competitive advantage is leading many to overlook the foundational work of data management, a shortcut that carries immense long-term risk. Pushing unvetted, unclassified data into complex AI systems is not a calculated risk but a gamble with predictable and severe consequences.

The potential fallout extends far beyond technical glitches. The most significant dangers include catastrophic data breaches that expose corporate secrets and customer information to the world. Such events inevitably attract the attention of regulators, leading to severe penalties and fines. Moreover, the loss of customer trust that follows a major data incident can cause irreversible damage to a company’s reputation and market position.

Leveraging AI to Govern AI

Amid these warnings, a key insight is emerging: artificial intelligence itself can be a crucial part of the solution. Experts propose using dedicated, AI-powered “data discovery” tools, deployed securely within an organization’s internal network, to tackle the challenge of data obscurity. These specialized systems are designed to methodically map an organization’s entire data landscape, shedding light on previously unknown information silos.

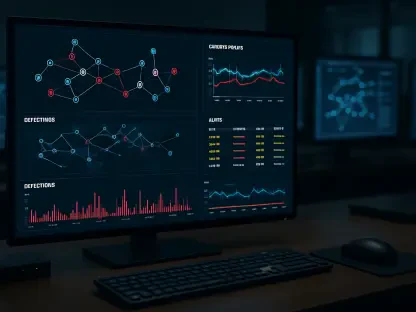

These governance tools can scan and catalog data across on-premises servers, cloud platforms, and SaaS applications, identifying known, unknown, and “shadow” data. Once the data is located, the AI can analyze its content and automatically classify it based on type and sensitivity level. This process provides the comprehensive visibility needed for organizations to make informed, safe, and strategic decisions about which datasets can be used to fuel their broader AI initiatives.
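To make the discover-then-classify workflow more concrete, here is a minimal Python sketch. It assumes the discovery step has already collected text samples from each asset; the `DataAsset` type, the regular-expression detectors, the three sensitivity tiers, and the location strings are all hypothetical illustrations, not the behavior of any particular governance product. An asset counts as “shadow” data here simply when it is missing from the organization’s registered inventory.

```python
import re
from dataclasses import dataclass, field

# Hypothetical detectors for illustration only; real discovery tools
# combine far more signals than a handful of regular expressions.
DETECTORS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "secret_marker": re.compile(r"\b(?:confidential|trade secret)\b", re.IGNORECASE),
}

# Assumed policy: which findings push an asset into which sensitivity tier.
RESTRICTED = {"us_ssn", "secret_marker"}
CONFIDENTIAL = {"email"}


@dataclass
class DataAsset:
    location: str     # where the asset lives: on-prem, cloud, or SaaS
    content: str      # sampled text content to inspect
    registered: bool  # True if the asset is already in the official inventory
    findings: set[str] = field(default_factory=set)
    sensitivity: str = "unclassified"


def classify(asset: DataAsset) -> DataAsset:
    """Inspect the sampled content and assign a sensitivity tier."""
    asset.findings = {name for name, rx in DETECTORS.items() if rx.search(asset.content)}
    if asset.findings & RESTRICTED:
        asset.sensitivity = "restricted"
    elif asset.findings & CONFIDENTIAL:
        asset.sensitivity = "confidential"
    else:
        asset.sensitivity = "internal"
    return asset


def build_catalog(assets: list[DataAsset]) -> list[dict]:
    """Classify every discovered asset and flag shadow data missing from the inventory."""
    catalog = []
    for asset in map(classify, assets):
        catalog.append({
            "location": asset.location,
            "sensitivity": asset.sensitivity,
            "findings": sorted(asset.findings),
            "shadow": not asset.registered,
        })
    return catalog


# Illustrative assets standing in for what a discovery scan might return.
discovered = [
    DataAsset("onprem://fileserver/hr/payroll.csv", "name,ssn\nAlice,123-45-6789", registered=True),
    DataAsset("cloud://bucket/exports/contacts.txt", "contact bob@example.com for renewals", registered=False),
    DataAsset("saas://wiki/pages/lunch-menu", "Tacos on Tuesday", registered=True),
]
for entry in build_catalog(discovered):
    print(entry)
```

Regular expressions are used here only to keep the sketch short. They catch formatted identifiers but miss sensitive content with no fixed shape, which is where the AI-driven content analysis described above would come in.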

The Future of AI: A Mandatory Shift to Data-First Strategies

The Rise of Intelligent Governance Frameworks

The trajectory of AI adoption is set for a fundamental correction, moving away from a technology-centric approach toward a data-centric one. In this new paradigm, best practices will mandate that a comprehensive data discovery and classification audit become a non-negotiable prerequisite for any new AI implementation. Organizations will learn that the long-term success of AI depends entirely on the quality and integrity of the data it consumes.

This strategic shift will reframe the central question driving AI projects. Instead of simply asking, “Can we use AI to solve this problem?” leaders will first have to ask, “What data do we have, and which of it can our AI safely use?” This data-first mindset ensures that governance is not an afterthought but the foundational pillar upon which all AI innovation is built, aligning technological ambition with responsible stewardship.
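The data-first question can be expressed as a simple pre-deployment gate. The sketch below is hypothetical: it assumes a prior discovery and classification audit has produced a catalog mapping each dataset to a sensitivity tier, and the tier names, deployment targets, and per-target limits are illustrative policy choices rather than any standard. Any dataset the audit has not covered is blocked outright, mirroring the idea of the audit as a prerequisite.

```python
from dataclasses import dataclass, field

# Sensitivity tiers ordered from least to most sensitive (assumed scheme).
TIERS = ["public", "internal", "confidential", "restricted"]

# Hypothetical policy: the most sensitive tier each deployment target may consume.
MAX_TIER = {
    "external_ai_service": "internal",
    "in_house_model": "confidential",
}


@dataclass
class Decision:
    approved: list[str] = field(default_factory=list)
    blocked: dict[str, str] = field(default_factory=dict)  # dataset -> reason


def gate(requested: list[str], catalog: dict[str, str], target: str) -> Decision:
    """Decide which requested datasets an AI deployment may use.

    `catalog` maps dataset names to tiers produced by a prior discovery and
    classification audit; anything missing from it is blocked by default.
    """
    limit = MAX_TIER[target]  # raises KeyError for an unknown target
    ceiling = TIERS.index(limit)
    decision = Decision()
    for name in requested:
        tier = catalog.get(name)
        if tier is None:
            decision.blocked[name] = "not yet discovered or classified"
        elif TIERS.index(tier) > ceiling:
            decision.blocked[name] = f"{tier} data exceeds the {limit} limit for {target}"
        else:
            decision.approved.append(name)
    return decision


# Illustrative catalog and request.
catalog = {
    "product_docs": "public",
    "support_tickets": "confidential",
    "payroll": "restricted",
}
result = gate(["product_docs", "support_tickets", "legacy_share"], catalog, "external_ai_service")
print("approved:", result.approved)
for name, reason in result.blocked.items():
    print("blocked:", name, "-", reason)
```

With this sample catalog, only `product_docs` is approved for the external service: `support_tickets` exceeds the tier limit, and `legacy_share` is blocked because it was never classified. Treating unknown data as blocked by default, rather than allowed, is the choice that keeps governance from becoming an afterthought.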

Potential Hurdles and the Cost of Inaction

This evolution will not be without its challenges. Mapping vast, distributed data estates is a technically complex undertaking, and many organizations will face significant internal resistance to such a foundational change. The comfort of the status quo and the perceived urgency of AI deployment will tempt many to continue with inadequate data governance practices.

However, the consequences of failing to adapt are severe and multifaceted. Companies that neglect robust data governance will find themselves outmaneuvered by competitors who leverage AI more responsibly and effectively. Furthermore, they will remain acutely exposed to heavy regulatory fines and to malicious actors ready to exploit poorly managed data. Inaction is not a neutral stance but a direct path toward competitive decline and mounting security risk.

The Broader Implications for Regulation and Trust

As AI becomes more deeply embedded in the fabric of society and commerce, government and industry bodies will inevitably introduce stricter regulations. These new frameworks will likely mandate far greater data transparency for any organization deploying AI systems, forcing companies to prove they understand and control the data fueling their models.

In this future landscape, organizations that proactively build robust AI data governance will gain more than just compliance. They will forge a powerful competitive advantage rooted in trust, security, and the ethical use of technology. Demonstrating responsible data stewardship will become a key differentiator, attracting customers, partners, and talent who prioritize safety and integrity in the AI era.

Conclusion: From Risky Ambition to Responsible Innovation

The dominant trend reveals a dangerous chasm: 90% of organizations use AI in a cybersecurity capacity, yet only 11% of senior IT decision-makers can confidently account for all of their organization’s data. This disconnect is unsustainable and exposes businesses to severe operational, financial, and reputational risks. Rushing to adopt a powerful technology without first mastering its foundational element, data, creates a paradox in which tools meant to enhance security can become liabilities.

The transformative power of AI can only be harnessed safely and effectively when built upon a foundation of complete data intelligence. Before organizations release their data into the next generation of AI tools, they must first invest in understanding it. Bridging the gap between AI ambition and data awareness is the most critical strategic imperative for any organization seeking to thrive in the AI era.