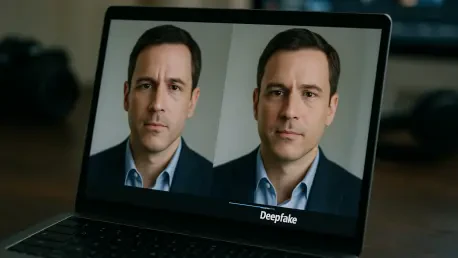

In an era when technology evolves at breakneck speed, deepfakes have emerged as a serious corporate cybersecurity threat, sending shockwaves through industries worldwide and creating new financial risks. These AI-generated videos and audio recordings have shifted from online novelties to powerful tools of deception that can replicate voices and faces with chilling accuracy. For corporations, a convincing fake of an executive erodes trust within the organization and undermines the security of digital communications. As reliance on virtual platforms and remote work grows, so does the opportunity for deepfake scams to infiltrate business operations. This article examines the escalating danger of deepfake attacks in corporate environments, the vulnerabilities they exploit, and the strategies enterprises need to protect themselves. Exploring both technological and human-centric defenses reveals a clearer path through the challenges of an AI-driven landscape.

Escalating Dangers of AI-Driven Fraud

Deepfake scams targeting corporations have rapidly become a pressing concern for businesses in every sector. Reports indicate that these attacks occur with alarming frequency, and the financial consequences can be devastating: in one widely reported case, an employee transferred $25.6 million after a video conference in which the other participants, including the company's chief financial officer, were deepfakes. Cybercriminals focus on impersonating executives and other key personnel, exploiting digital communication channels to trigger unauthorized transactions or gain access to sensitive data. The trend marks a shift in cybercrime, with AI used not just for disruption but for direct monetary gain. The scale of these incidents means companies should treat deepfakes as a mainstream threat rather than a peripheral risk and reevaluate their existing security frameworks accordingly.

Beyond the financial toll, deepfake scams can inflict equally crippling reputational damage. When employees or stakeholders are deceived by hyper-realistic fabrications, trust in leadership and internal communications begins to crumble, and the harm to working relationships can outlast the incident itself. The effects often extend to clients and partners, who may question the integrity of a business compromised by such fraud. Legal and regulatory fallout can add further burdens as companies grapple with accountability for breaches enabled by synthetic media. Reactive measures alone are not enough; addressing this threat demands a proactive stance that anticipates the next wave of AI-driven deception. As cybercriminals refine their tactics, businesses must stay vigilant and ensure their defenses evolve in step with the sophistication of the attacks.

Exploiting Remote Work Vulnerabilities

The shift to remote and hybrid work has inadvertently created fertile ground for deepfake perpetrators. With employees spread across locations and unable to confirm identities in person, they are more likely to fall for manipulated video calls or audio recordings. Such scams can disrupt critical workflows, delay decisions, and sow doubt among team members about the authenticity of digital exchanges. The result is a stark reality: the tools that enable seamless virtual collaboration also serve as entry points for fraudsters. Companies must therefore reassess how their reliance on digital platforms, however essential to modern operations, amplifies the risk of AI-generated deception.

Compounding the problem is the psychological toll on employees, who may grow overly suspicious of legitimate communications out of fear of being deceived. This erosion of trust can hinder productivity and collaboration as teams hesitate to act on instructions delivered through virtual channels. At the same time, many organizations lack standardized protocols for verifying identities in remote settings, which leaves them exposed to exploitation. Addressing these challenges requires both technological innovation and policy reform so that remote workforces can distinguish genuine interactions from sophisticated fakes. By prioritizing secure communication practices and making verification a routine expectation, businesses can reduce the risks at the intersection of remote work and AI-driven fraud, protecting both operations and morale.

Dual Role of AI in Deepfake Challenges

Artificial intelligence stands at the crossroads of the deepfake crisis, acting as both the catalyst for increasingly convincing scams and the key to developing robust countermeasures. On one hand, advancements in AI have democratized access to tools that create hyper-realistic synthetic media, enabling even less-skilled cybercriminals to produce content that deceives the untrained eye or ear. This accessibility has fueled a surge in targeted corporate attacks, where forged videos or voice clones impersonate leaders to authorize fraudulent transactions. The realism of these fabrications often bypasses traditional security checks, making them a formidable weapon in the arsenal of digital fraudsters. Understanding this dual nature of AI is essential for corporations aiming to protect themselves from the very technology that drives their innovation.

At the same time, AI offers promising ways to counter the threats it helps create, fueling a technological arms race in cybersecurity. Biometric authentication, liveness detection, and adaptive risk-based controls for financial transactions can help identify and block synthetic content before it causes harm. Detection tools analyze subtle discrepancies in media that human observers might miss, providing a layer of defense that can evolve alongside deepfake techniques. Implementing these solutions, however, requires significant investment and a sustained commitment to keeping pace with rapidly advancing threats. Enterprises must integrate AI-driven defenses into their broader security strategies so that the technology serves as a shield for defenders, not only a sword for adversaries. This balance is critical to maintaining trust and integrity in digital interactions.
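To make the idea of a risk-based control for financial transactions more concrete, here is a minimal sketch that scores a transfer request on a few signals and maps the score to one of three actions: allow, step up verification, or hold. It is a simple rule-based stand-in, not an AI model. The class and function names, the signal weights in `CHANNEL_RISK`, the 10,000 and 100,000 amount tiers, and the cutoffs of 4 and 8 are all hypothetical values chosen for illustration; a real system would tune or learn them from actual fraud data.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only; not calibrated on real fraud data.
CHANNEL_RISK = {"in_person": 0, "verified_app": 1, "email": 2, "phone": 2, "video_call": 2}


@dataclass
class TransferRequest:
    amount: float          # requested transfer in USD
    channel: str           # how the instruction arrived
    new_beneficiary: bool  # first payment to this account?
    marked_urgent: bool    # did the requester press for speed or secrecy?
    liveness_passed: bool  # did a liveness check on the requester succeed?


def risk_score(req: TransferRequest) -> int:
    """Add up simple risk signals; a higher score means a riskier request."""
    score = CHANNEL_RISK.get(req.channel, 3)  # unknown channels get the highest weight
    if req.amount >= 100_000:
        score += 3
    elif req.amount >= 10_000:
        score += 1
    if req.new_beneficiary:
        score += 2
    if req.marked_urgent:
        score += 2
    if not req.liveness_passed:
        score += 3
    return score


def decide(req: TransferRequest) -> str:
    """Map the score to an action: allow, step up verification, or hold."""
    score = risk_score(req)
    if score >= 8:
        return "hold"      # stop the payment pending manual fraud review
    if score >= 4:
        return "step_up"   # require out-of-band confirmation before release
    return "allow"


# An urgent $25.6M transfer to a new account, requested on a video call:
print(decide(TransferRequest(25_600_000, "video_call", True, True, True)))  # hold
print(decide(TransferRequest(20_000, "email", True, False, True)))          # step_up
print(decide(TransferRequest(500, "in_person", False, False, True)))        # allow
```

Note that the first request is held even though its liveness check passed: the size, urgency, new beneficiary, and channel are enough on their own. Layering independent signals this way reflects the point that no single detector should be trusted to catch every deepfake.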

Strengthening Defenses Through Human Vigilance

While technology is indispensable, people remain a vital part of any corporate defense against deepfakes. Teaching employees to recognize red flags in unexpected communications, such as unusual requests or inconsistencies in tone, builds a crucial first line of defense against AI-generated scams. Training programs should emphasize healthy skepticism and encourage staff to verify suspicious interactions through a separate, trusted channel before taking action. This awareness can prevent costly mistakes, especially where financial transactions or sensitive data are involved. By fostering a culture in which questioning authenticity is standard practice, companies can turn their workforce into active participants in cybersecurity rather than potential vulnerabilities.

Beyond awareness, robust internal policies further strengthen an organization's resilience to deepfake risks. Multi-layered verification for high-stakes decisions, such as financial approvals or access to confidential systems, removes single points of failure: one convincing call or message is never enough on its own to move money or grant access. Secure communication protocols, including encrypted channels and standardized identity checks, add another barrier against deception. Consistently enforced, these measures make it increasingly difficult for fraudsters to penetrate organizational defenses. Combining human vigilance with structured guidelines addresses the nuanced nature of deepfake threats, recognizing that technology alone cannot eliminate risks that stem from human judgment. This holistic approach is essential for sustained protection in an AI-dominated landscape.
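The multi-layered verification described above can be sketched as a release check for high-value payments, assuming two rules: the requester's identity must be confirmed by calling back a number from a trusted internal directory (never one supplied in the request itself), and a minimum number of approvers other than the requester must sign off. The threshold, approver count, directory contents, and the `release_blockers` function are hypothetical illustrations, not a standard or any particular product's API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

# Hypothetical policy values for illustration only.
HIGH_VALUE_THRESHOLD = 10_000   # USD; at or above this, extra checks apply
REQUIRED_APPROVERS = 2          # independent sign-offs needed

# Hypothetical trusted directory (555-01xx numbers are reserved for fiction).
# Callback numbers must come from here, never from the request itself.
DIRECTORY = {"cfo": "+1-555-0100", "controller": "+1-555-0101"}


@dataclass
class PaymentRequest:
    requester: str                                    # who the instruction claims to come from
    amount: float                                     # requested amount in USD
    approvers: Set[str] = field(default_factory=set)  # staff who have signed off
    callback_number: Optional[str] = None             # number actually dialed to confirm


def release_blockers(req: PaymentRequest) -> List[str]:
    """Return the unmet checks; an empty list means the payment may be released."""
    if req.amount < HIGH_VALUE_THRESHOLD:
        return []  # low-value payments follow the normal workflow
    blockers = []
    expected = DIRECTORY.get(req.requester)
    if expected is None:
        blockers.append("requester not in trusted directory")
    elif req.callback_number != expected:
        blockers.append("no callback to the directory number on file")
    # The claimed requester cannot count as one of their own approvers.
    independent = req.approvers - {req.requester}
    if len(independent) < REQUIRED_APPROVERS:
        blockers.append(
            f"needs {REQUIRED_APPROVERS} independent approvers, has {len(independent)}"
        )
    return blockers


# A "CFO" request confirmed only via the number in the message, with one outside approver:
req = PaymentRequest("cfo", 250_000, approvers={"cfo", "treasury_lead"},
                     callback_number="+1-555-0199")
print(release_blockers(req))
# ['no callback to the directory number on file', 'needs 2 independent approvers, has 1']

req.callback_number = DIRECTORY["cfo"]   # call back the number on file
req.approvers.add("ap_manager")          # second independent sign-off
print(release_blockers(req))             # []
```

Returning the list of unmet checks, rather than a bare yes or no, tells the employee exactly which step is missing, which makes the policy easier to follow when an urgent-sounding request is applying pressure.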

Forging a Resilient Future Against AI Fraud

The deepfake menace confronts enterprises with a multifaceted challenge that strikes at the core of digital trust. Staggering financial losses, the vulnerabilities amplified by remote work, and the double-edged role of AI have reshaped the cybersecurity landscape and demand urgent action. Businesses that integrate advanced authentication tools such as biometric systems and liveness detection gain a significant edge in thwarting synthetic media attacks. Those that also prioritize employee training and robust verification policies strengthen their human defenses, showing that technology and awareness are most effective when paired. The central lesson is that staying ahead of AI-driven fraud requires constant adaptation and investment in both innovation and education.

Looking ahead, the path to resilience lies in anticipating the next evolution of deepfake tactics through proactive measures. Enterprises should consider forming dedicated task forces to monitor emerging AI threats and update defenses accordingly. Collaborating with industry peers to share insights and best practices can also amplify collective security, creating a united front against cybercriminals. Additionally, advocating for stricter regulations around synthetic media could deter malicious use while fostering ethical AI development. As the technological arms race continues, businesses must commit to a dynamic strategy that evolves with the threat landscape, ensuring that trust in digital interactions is not only preserved but strengthened for the future.