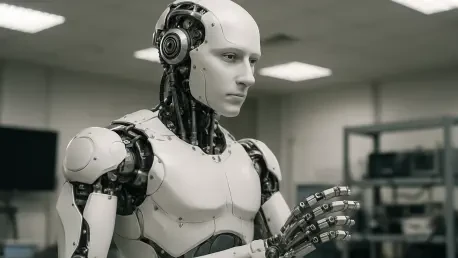

As the world stands on the cusp of an automated revolution, with advanced humanoid robots poised to integrate into factories, homes, and public spaces, a stark warning from cybersecurity analysts casts a long shadow over this promising future. Beneath the polished chrome and sophisticated artificial intelligence lies a fundamental design flaw, a paradox so deeply embedded in the technology that it threatens to turn these marvels of engineering into vectors for unprecedented digital and physical disruption. The race to build the future of labor may be inadvertently building a global infrastructure of insecurity.

This is not a distant, theoretical problem. The core issue revolves around a critical trade-off being made at the very heart of robotic design, where the need for instantaneous physical response time is being prioritized over essential cybersecurity protocols. This decision, driven by both market pressures and the laws of physics, is creating a new and vulnerable attack surface. The consequences extend far beyond data theft, venturing into the realm of physical manipulation, corporate espionage on a massive scale, and a geopolitical power struggle for control over the next generation of automated technology.

The Billion-Dollar Question: Is the Robot Revolution Built on a Foundation of Sand?

The economic stakes fueling the rapid development of humanoid robotics are staggering. Leading financial institutions, including Morgan Stanley and Bank of America, project a future where the global population of these machines could swell from tens of thousands to hundreds of millions by 2050. This expansion is driven by the transformative promise of drastically reducing manufacturing costs by replacing manual labor, a vision that has ignited a multi-trillion-dollar market forecast and attracted immense capital investment.

However, as capital pours into this burgeoning industry, experts are raising alarms that this entire enterprise is being constructed on a dangerously weak foundation. The immense pressure to innovate and deploy functional hardware quickly has relegated cybersecurity to an afterthought. This neglect has created a systemic vulnerability hidden within the core architecture of the robots themselves, threatening the stability and security of the very revolution investors are betting on. The rush to market is creating a generation of technology that may be brilliant in function but disastrously fragile in its defense.

A New Cold War: The Geopolitical Race for Robotic Supremacy

The competition to lead the humanoid robotics industry extends far beyond corporate boardrooms and into the arena of global strategic interests. Nations now view dominance in this sector as a critical component of economic and military power, creating a high-stakes geopolitical race that mirrors the technological standoffs of the past. The drive to achieve supremacy in “embodied AI” is not merely about market share; it is about controlling the future of manufacturing, logistics, and even national security.

This intense rivalry is particularly evident in national industrial policies. According to Joseph Rooke, a risk insights director at Recorded Future, nations are actively competing for an edge. China, for example, has formally designated embodied AI as a strategic priority in its 15th Five-Year Plan. This commitment is underscored by a surge in innovation, with the country generating over 5,000 patents that mention the term “humanoid” in the last five years alone. This hyper-competitive environment accelerates development but also fosters a climate where security is secondary to speed, creating fertile ground for state-sponsored espionage and supply chain vulnerabilities.

The Two-Pronged Threat: Hacking the Creators and the Creations

The cybersecurity risks facing the robotics industry manifest as a dual-front war, targeting both the companies that design the robots and the machines they produce. The first front is a campaign of sophisticated corporate espionage. State-sponsored threat actors are actively infiltrating the networks of robotics manufacturers to steal valuable intellectual property. Recorded Future has observed numerous such campaigns since late 2024, noting that these attacks rely not on exotic, robot-specific exploits but on common, off-the-shelf information-stealing malware. Tools like the Dark Crystal RAT (DcRAT), AsyncRAT, XWorm, and the Havoc framework are being deployed to siphon away proprietary designs and trade secrets.

More alarmingly, this digital infiltration is a precursor to a far greater threat: supply chain compromise. Analysts warn that after stealing IP, the logical next step for these actors is to embed malicious code or hardware into the robots during the manufacturing process, a tactic already seen in the semiconductor industry.

Simultaneously, the second front of this war involves direct attacks on the robots. Researchers have already demonstrated tangible exploits on commercial models. Víctor Mayoral-Vilches, founder of Alias Robotics, has showcased these vulnerabilities by gaining root access to robots from Unitree, a prominent manufacturer. His team even created a self-propagating worm capable of spreading from one robot to another via Bluetooth, highlighting the potential for widespread, automated compromise. Their work also uncovered severe privacy flaws, with internet-connected robots transmitting sensitive system data to overseas servers without user knowledge or consent.

Access Control and Prayer: An Industry’s Alarming Cybersecurity Immaturity

A profound cultural deficit within the robotics industry is exacerbating these technical vulnerabilities. According to Mayoral-Vilches, there is a shocking lack of basic cybersecurity awareness among robotics companies. Many developers and executives are unfamiliar with fundamental security concepts, including the system for tracking Common Vulnerabilities and Exposures (CVEs). This ignorance translates directly into inaction; while robot security is acknowledged as a “cool” idea, it rarely receives the necessary investment or prioritization.

This cultural immaturity has led to a prevailing security posture best described as “access control and prayer.” Companies may implement rudimentary controls on a robot’s external communications, but its internal systems are often left completely open and unprotected. Once an attacker bypasses the perimeter, they typically find a transparent and vulnerable internal network, allowing them to move freely and take control of critical functions. The problem is further compounded by the industry’s reliance on the Robot Operating System (ROS), a foundational software platform built on technologies that are themselves inherently flawed. This means that even well-intentioned security efforts are often built upon a weak base, making it relatively easy for a determined adversary to “break down those layers.”

The Speed Paradox: When Security Becomes a Liability

The most significant obstacle to securing humanoid robots is not negligence alone but a fundamental conflict at the core of their engineering. A robot’s ability to walk, balance, and interact with its environment depends on a critical process known as the “control loop”—the cycle of sensing the environment, computing a response, and actuating a physical movement. For a bipedal robot to remain stable, this entire loop must execute in milliseconds. Any significant delay can result in a catastrophic physical failure, such as falling over or crashing into an object.

Herein lies the paradox: the foundational tools of modern cybersecurity, such as robust encryption and authentication protocols, inherently introduce latency. A 100-millisecond delay that might be a minor inconvenience in a web application could be disastrous for a robot’s stability. Faced with this choice, developers consistently prioritize operational speed and physical safety over digital security, effectively designing insecurity into the system from the start. This trade-off makes patching existing systems insufficient and necessitates a complete overhaul of the industry’s approach to security.
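The arithmetic behind this trade-off can be sketched as a simple time budget. The example below is illustrative only: the 2-millisecond loop period and the per-stage timings are assumed numbers, not measurements from any real robot, and `LoopBudget` is a hypothetical helper introduced for this sketch. It compares the time left over in one control cycle with the latency a security step would add.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoopBudget:
    """Time budget for one sense-compute-actuate cycle, in microseconds.

    All values are assumed for illustration; real budgets depend on the robot.
    """
    period_us: int   # total time available for one cycle
    sense_us: int    # reading sensors
    compute_us: int  # computing the response
    actuate_us: int  # commanding the motors

    def slack_us(self) -> int:
        """Time left in the cycle after the three stages have run."""
        return self.period_us - (self.sense_us + self.compute_us + self.actuate_us)

    def fits(self, security_overhead_us: int) -> bool:
        """True if an added security step still fits inside the cycle."""
        return security_overhead_us <= self.slack_us()


# Assumed example: a 2 ms control loop with 0.3 ms of slack.
budget = LoopBudget(period_us=2_000, sense_us=400, compute_us=1_000, actuate_us=300)

print(budget.slack_us())     # slack remaining in the cycle
print(budget.fits(100_000))  # a 100 ms delay, as in the article's example
print(budget.fits(50))       # a hypothetical 50 microsecond check
```

Under these assumed numbers, a 100-millisecond delay is hundreds of times larger than the slack in a single cycle, which is why such overhead gets cut first. A check that costs tens of microseconds would fit, which suggests the question is less whether to add security than how to add it cheaply enough.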

Resolving this paradox requires a fundamental cultural and architectural shift that the industry is only beginning to contemplate. Experts conclude that securing the next generation of robots demands that modern cybersecurity principles, such as zero-trust architectures and granular access controls, be integrated directly into the foundational design. The challenge is no longer theoretical; it has become an urgent prerequisite for safely deploying these powerful machines into society. A secure robotic future has to be built on a new foundation, one where functionality and safety are finally treated as inseparable.
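To make the idea of zero trust and granular access control concrete, here is a minimal sketch of what it could look like inside a robot. It is not drawn from any vendor's design or from ROS. The component names, commands, keys, and the `POLICY` table are all hypothetical. Every internal command must carry a valid authentication tag, and each component may issue only the commands it has been explicitly granted.

```python
import hashlib
import hmac

# Hypothetical policy: which internal component may issue which command.
# Anything not listed here is denied by default.
POLICY = {
    "balance_controller": {"set_joint_torque"},
    "telemetry": {"read_battery"},
}

# Hypothetical per-component secrets; a real system would provision these securely.
KEYS = {
    "balance_controller": b"demo-key-1",
    "telemetry": b"demo-key-2",
}


def sign(key: bytes, sender: str, command: str) -> bytes:
    """Compute an HMAC-SHA256 tag binding the sender to the command."""
    message = f"{sender}|{command}".encode()
    return hmac.new(key, message, hashlib.sha256).digest()


def authorize(keys: dict[str, bytes], sender: str, command: str, tag: bytes) -> bool:
    """Accept a command only if the sender is known, the tag is valid,
    and the policy grants that sender that specific command."""
    key = keys.get(sender)
    if key is None:
        return False  # unknown component: never trusted
    if not hmac.compare_digest(sign(key, sender, command), tag):
        return False  # forged or tampered message
    return command in POLICY.get(sender, set())


# A telemetry component reading the battery is allowed.
ok_tag = sign(KEYS["telemetry"], "telemetry", "read_battery")
print(authorize(KEYS, "telemetry", "read_battery", ok_tag))

# The same component trying to drive the motors is refused,
# even though its message is correctly signed.
bad_tag = sign(KEYS["telemetry"], "telemetry", "set_joint_torque")
print(authorize(KEYS, "telemetry", "set_joint_torque", bad_tag))
```

The point of the design is that getting past the perimeter does not by itself grant control: a compromised telemetry process still cannot command the joints.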